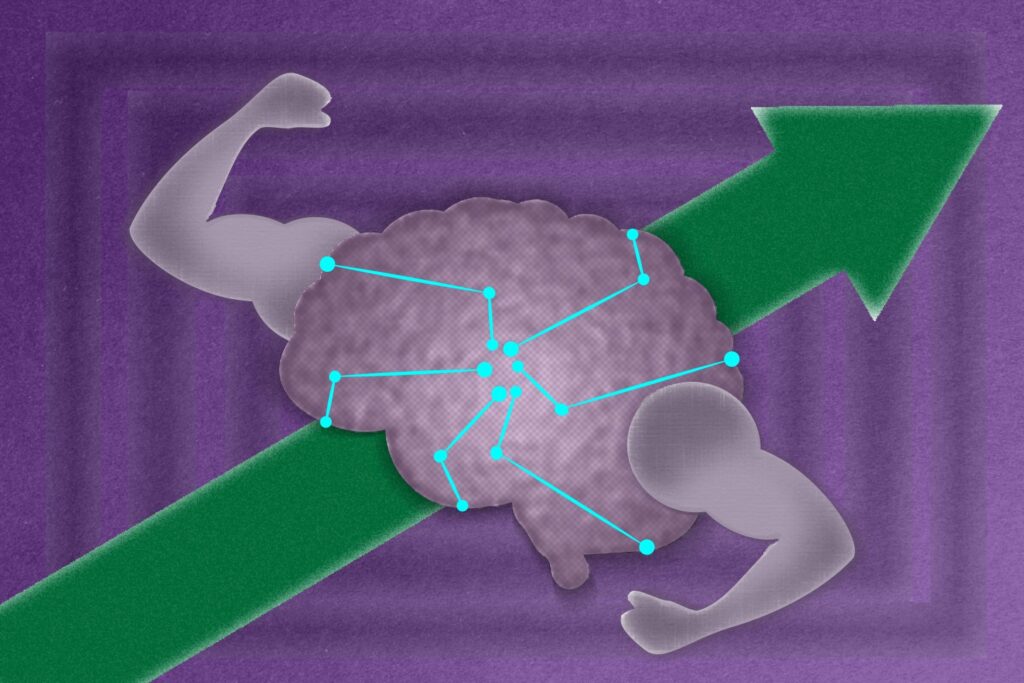

Even networks long considered “untrainable” can learn effectively with a bit of a helping hand. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have shown that a brief period of alignment between neural networks, a method they call guidance, can dramatically improve the performance of architectures previously thought unsuitable for modern tasks.

Their findings suggest that many so-called “ineffective” networks may simply start from less-than-ideal starting points, and that short-term guidance can place them in a spot that makes learning easier for the network.

The team’s guidance method works by encouraging a target network to match the internal representations of a guide network during training. Unlike traditional methods like knowledge distillation, which focus on mimicking a teacher’s outputs, guidance transfers structural knowledge directly from one network to another. This means the target learns how the guide organizes information within each layer, rather than simply copying its behavior. Remarkably, even untrained networks contain architectural biases that can be transferred, while trained guides additionally convey learned patterns.

“We found these results pretty surprising,” says Vighnesh Subramaniam ’23, MEng ’24, MIT Department of Electrical Engineering and Computer Science (EECS) PhD student and CSAIL researcher, who is a lead author on a paper presenting these findings. “It’s impressive that we could use representational similarity to make these traditionally ‘crappy’ networks actually work.”

Guide-ian angel

A central question was whether guidance must continue throughout training, or if its primary effect is to provide a better initialization. To explore this, the researchers performed an experiment with deep fully connected networks (FCNs). Before training on the real problem, the network spent a few steps practicing with another network using random noise, like stretching before exercise. The results were striking: Networks that typically overfit immediately remained stable, achieved lower training loss, and avoided the classic performance degradation seen in something called standard FCNs. This alignment acted like a helpful warmup for the network, showing that even a short practice session can have lasting benefits without needing constant guidance.

The study also compared guidance to knowledge distillation, a popular approach in which a student network attempts to mimic a teacher’s outputs. When the teacher network was untrained, distillation failed completely, since the outputs contained no meaningful signal. Guidance, by contrast, still produced strong improvements because it leverages internal representations rather than final predictions. This result underscores a key insight: Untrained networks already encode valuable architectural biases that can steer other networks toward effective learning.

Beyond the experimental results, the findings have broad implications for understanding neural network architecture. The researchers suggest that success — or failure — often depends less on task-specific data, and more on the network’s position in parameter space. By aligning with a guide network, it’s possible to separate the contributions of architectural biases from those of learned knowledge. This allows scientists to identify which features of a network’s design support effective learning, and which challenges stem simply from poor initialization.

Guidance also opens new avenues for studying relationships between architectures. By measuring how easily one network can guide another, researchers can probe distances between functional designs and reexamine theories of neural network optimization. Since the method relies on representational similarity, it may reveal previously hidden structures in network design, helping to identify which components contribute most to learning and which do not.

Salvaging the hopeless

Ultimately, the work shows that so-called “untrainable” networks are not inherently doomed. With guidance, failure modes can be eliminated, overfitting avoided, and previously ineffective architectures brought into line with modern performance standards. The CSAIL team plans to explore which architectural elements are most responsible for these improvements and how these insights can influence future network design. By revealing the hidden potential of even the most stubborn networks, guidance provides a powerful new tool for understanding — and hopefully shaping — the foundations of machine learning.

“It’s generally assumed that different neural network architectures have particular strengths and weaknesses,” says Leyla Isik, Johns Hopkins University assistant professor of cognitive science, who wasn’t involved in the research. “This exciting research shows that one type of network can inherit the advantages of another architecture, without losing its original capabilities. Remarkably, the authors show this can be done using small, untrained ‘guide’ networks. This paper introduces a novel and concrete way to add different inductive biases into neural networks, which is critical for developing more efficient and human-aligned AI.”

Subramaniam wrote the paper with CSAIL colleagues: Research Scientist Brian Cheung; PhD student David Mayo ’18, MEng ’19; Research Associate Colin Conwell; principal investigators Boris Katz, a CSAIL principal research scientist, and Tomaso Poggio, an MIT professor in brain and cognitive sciences; and former CSAIL research scientist Andrei Barbu. Their work was supported, in part, by the Center for Brains, Minds, and Machines, the National Science Foundation, the MIT CSAIL Machine Learning Applications Initiative, the MIT-IBM Watson AI Lab, the U.S. Defense Advanced Research Projects Agency (DARPA), the U.S. Department of the Air Force Artificial Intelligence Accelerator, and the U.S. Air Force Office of Scientific Research.

Their work was recently presented at the Conference and Workshop on Neural Information Processing Systems (NeurIPS).